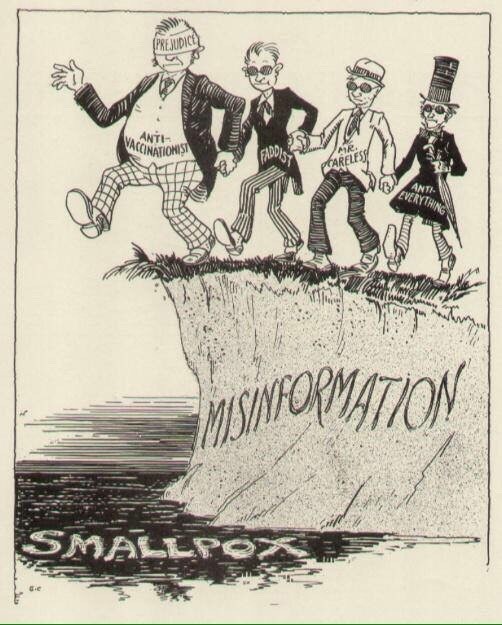

A new paper published in the leading journal PNAS (Proceedings of the National Academy of Sciences) describes the challenges posed to scientific knowledge by the proliferation of online misinformation on issues like climate change, vaccines and genetically modified crops.

It explains how “profound structural shifts in the media environment” have “enabled unscrupulous actors with ulterior motives increasingly to circulate fake news, misinformation, and disinformation with the help of trolls, bots, and respondent-driven algorithms.”

Because of these structural shifts, “the views of scientists and the public are now very far apart” on issues like climate, vaccines and GMOs. Only 21 percent of US adults say they have a “great deal of confidence” that scientists would act in the best interests of the public.

As a result, the paper warns, “whenever scientific findings clash with a person’s or group’s political agenda, be it conservative (as with climate science and immigration) or liberal (as with genetically modified foods and vaccination risks), scientists can expect to encounter a targeted campaign of fake news, misinformation, and disinformation in response, no matter how clearly the information is presented or how carefully and convincingly it is framed.”

The paper was written by Shanto Iyengar of Stanford University and Douglas S. Massey of Princeton University, both of whom participated in a US National Academy of Sciences colloquium on the Science of Science Communication. The full text is freely available online.

The authors point out that although improving scientists’ ability to communicate with the public can be useful, “we suspect that distrust in the scientific enterprise and misperceptions about the knowledge it produces increasingly have less to do with problems of communication and more to do with the ready availability of misleading and biased information in the media.”

The paper analyzes the profound structural changes that have taken place in the media landscape since 1970, including “broadcast deregulation, the repeal of the Fairness Doctrine, the rise of cable television, the advent of the internet, and the expansion of social media,” which have helped drive political polarization and partisan animosity.

Although both sides of the political divide can be purveyors of misinformation, the PNAS authors state clearly that “deliberate efforts to undermine trust in science unfortunately come predominantly from the right of the political spectrum.”

While confidence in the scientific community among liberals has hardly changed over recent decades, the percentage of conservatives expressing a great deal of confidence in the scientific community fell from 56 percent to 36 percent between 1974 and 2016.

Partisan polarization means that more and more citizens are stuck in “echo chambers,” the authors write, and that people have become “more motivated to reject information and arguments that clash with their worldview.” When scientific evidence does clash with partisan loyalties, “it is either dismissed or distorted, thereby impeding the diffusion of scientific findings.”

Iyengar and Massey write: “The predictability of partisan beliefs and attitudes represents a classic case of motivated reasoning in which affirmation of one’s partisan identity takes precedence over dispassionate consideration of the evidence.”

The paper cites the example of a National Academy of Sciences study on immigration which, although it showed that the economic impacts of immigration are primarily positive, was portrayed in the conservative media and blogosphere as showing the opposite.

The paper stops short of offering solutions to the increasingly difficult communications environment facing the scientific community. The authors suggest that organized rapid rebuttal of misinformation might be useful but, “given what research tells us about how the tribalization of US society has closed American minds,” it “might not be very effective.”